Maximum Margin Criterion (MMC) is a linear supervised dimension reduction method that maximizes the average margin between classes. The criterion is $$\mathrm{tr}(S_b - S_w),$$ where \(S_b\) is the between-class scatter matrix (the variance of the class mean vectors about the overall mean) and \(S_w\) is the within-class scatter matrix (the spread of each class about its own mean). By contrast, Principal Component Analysis (PCA) maximizes the total scatter \(S_t = S_b + S_w\).
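Why the trace difference measures an "average margin" can be seen from a short derivation. The notation here is introduced for illustration: class priors \(p_i = n_i/n\), class means \(m_i\), overall mean \(m = \sum_i p_i m_i\), and class covariances \(S_i\), so that \(S_b = \sum_i p_i (m_i - m)(m_i - m)^\top\) and \(S_w = \sum_i p_i S_i\). If the margin between classes \(i\) and \(j\) is taken as \(\lVert m_i - m_j \rVert^2 - \mathrm{tr}(S_i) - \mathrm{tr}(S_j)\), its prior-weighted average reduces to the MMC criterion, using \(\sum_i p_i = 1\) and \(\sum_i p_i (m_i - m) = 0\):

$$
\begin{aligned}
J &= \frac{1}{2}\sum_{i}\sum_{j} p_i p_j \left( \lVert m_i - m_j \rVert^2 - \mathrm{tr}(S_i) - \mathrm{tr}(S_j) \right) \\
  &= \sum_i p_i \lVert m_i - m \rVert^2 - \sum_i p_i\, \mathrm{tr}(S_i) \\
  &= \mathrm{tr}(S_b) - \mathrm{tr}(S_w) = \mathrm{tr}(S_b - S_w).
\end{aligned}
$$

For a projection \(W\) with orthonormal columns, the same argument gives \(\mathrm{tr}\big(W^\top (S_b - S_w) W\big)\), which is maximized by the eigenvectors of \(S_b - S_w\) with the \(ndim\) largest eigenvalues. Unlike LDA, no inverse of \(S_w\) is needed.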

do.mmc(X, label, ndim = 2)

Arguments

- X

an \((n\times p)\) matrix whose rows are observations and columns represent independent variables.

- label

a length-\(n\) vector of data class labels.

- ndim

an integer-valued target dimension.

Value

a named Rdimtools S3 object containing

- Y

an \((n\times ndim)\) matrix whose rows are embedded observations.

- projection

a \((p\times ndim)\) matrix whose columns form a basis for the projection.

- algorithm

name of the algorithm.
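To make the criterion concrete, here is a minimal base-R sketch that returns the same kind of `Y` and `projection` fields described above. The helper `mmc_sketch` is hypothetical and not part of Rdimtools. It assumes class priors \(n_k/n\), builds \(S_w\) from per-class covariances, centers the data at the overall mean before projecting, and keeps the top `ndim` eigenvectors of \(S_b - S_w\). Its output is not guaranteed to match `do.mmc` numerically (eigenvector signs and preprocessing may differ).

```r
# Minimal MMC sketch in base R. `mmc_sketch` is a hypothetical helper,
# not Rdimtools' implementation. Assumptions: class priors p_k = n_k / n,
# S_w built from per-class covariances (divided by n_k), data centered at
# the overall mean before projection.
mmc_sketch <- function(X, label, ndim = 2) {
  X <- as.matrix(X)
  label <- droplevels(as.factor(label))   # ignore empty classes
  n <- nrow(X)
  p <- ncol(X)
  m <- colMeans(X)                        # overall mean
  Sb <- matrix(0, p, p)                   # between-class scatter
  Sw <- matrix(0, p, p)                   # within-class scatter
  for (k in levels(label)) {
    Xk <- X[label == k, , drop = FALSE]
    nk <- nrow(Xk)
    pk <- nk / n                          # class prior
    mk <- colMeans(Xk)                    # class mean
    Sb <- Sb + pk * tcrossprod(mk - m)    # p_k (m_k - m)(m_k - m)^T
    Ck <- sweep(Xk, 2, mk)                # center class k at its own mean
    Sw <- Sw + pk * crossprod(Ck) / nk    # p_k times class covariance
  }
  # S_b - S_w is symmetric, so eigen() returns orthonormal eigenvectors
  # with eigenvalues sorted in decreasing order.
  eig <- eigen(Sb - Sw, symmetric = TRUE)
  W <- eig$vectors[, seq_len(ndim), drop = FALSE]
  Y <- sweep(X, 2, m) %*% W               # embed centered observations
  list(Y = Y, projection = W, criterion = sum(eig$values[seq_len(ndim)]))
}

# Usage on the built-in iris data (datasets package)
out <- mmc_sketch(iris[, 1:4], iris$Species, ndim = 2)
dim(out$Y)           # 150 rows, 2 columns
```

Solving a symmetric eigenproblem on \(S_b - S_w\) keeps the sketch short and avoids inverting \(S_w\), which can be singular when there are fewer observations than variables.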

References

Li H, Jiang T, Zhang K (2006). “Efficient and Robust Feature Extraction by Maximum Margin Criterion.” IEEE Transactions on Neural Networks, 17(1), 157–165.

Examples

# \donttest{

## use iris data

data(iris, package="Rdimtools")

subid = sample(1:150, 50)

X = as.matrix(iris[subid,1:4])

label = as.factor(iris[subid,5])

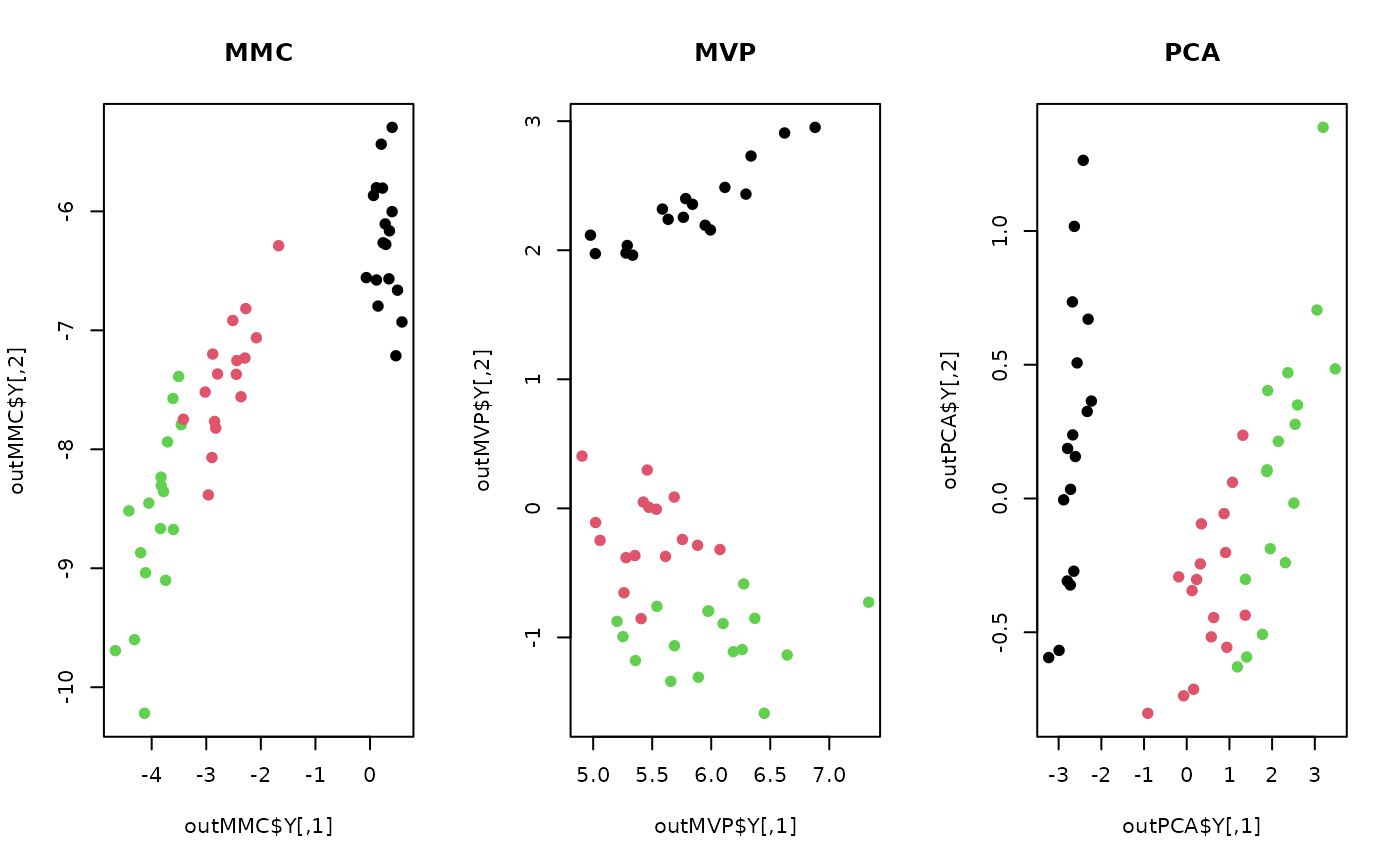

## compare MMC with other methods

outMMC = do.mmc(X, label)

outMVP = do.mvp(X, label)

outPCA = do.pca(X)

## visualize

opar <- par(no.readonly=TRUE)

par(mfrow=c(1,3))

plot(outMMC$Y, pch=19, col=label, main="MMC")

plot(outMVP$Y, pch=19, col=label, main="MVP")

plot(outPCA$Y, pch=19, col=label, main="PCA")

par(opar)

# }
