do.ldakm is an unsupervised subspace discovery method that combines linear discriminant analysis (LDA) and K-means algorithm.

It tries to build an adaptive framework that selects the most discriminative subspace. It iteratively applies two methods in that

the clustering process is integrated with the subspace selection, and continuously updates its discrimative basis. From its formulation

with respect to generalized eigenvalue problem, it can be considered as generalization of Adaptive Subspace Iteration (ASI) and Adaptive Dimension Reduction (ADR).

do.ldakm(

X,

ndim = 2,

preprocess = c("center", "scale", "cscale", "decorrelate", "whiten"),

maxiter = 10,

abstol = 0.001

)Arguments

- X

an \((n\times p)\) matrix or data frame whose rows are observations.

- ndim

an integer-valued target dimension.

- preprocess

an additional option for preprocessing the data. Default is "center". See also

aux.preprocessfor more details.- maxiter

maximum number of iterations allowed.

- abstol

stopping criterion for incremental change in projection matrix.

Value

a named list containing

- Y

an \((n\times ndim)\) matrix whose rows are embedded observations.

- trfinfo

a list containing information for out-of-sample prediction.

- projection

a \((p\times ndim)\) whose columns are basis for projection.

References

Ding C, Li T (2007). “Adaptive Dimension Reduction Using Discriminant Analysis and K-Means Clustering.” In Proceedings of the 24th International Conference on Machine Learning, 521--528.

Examples

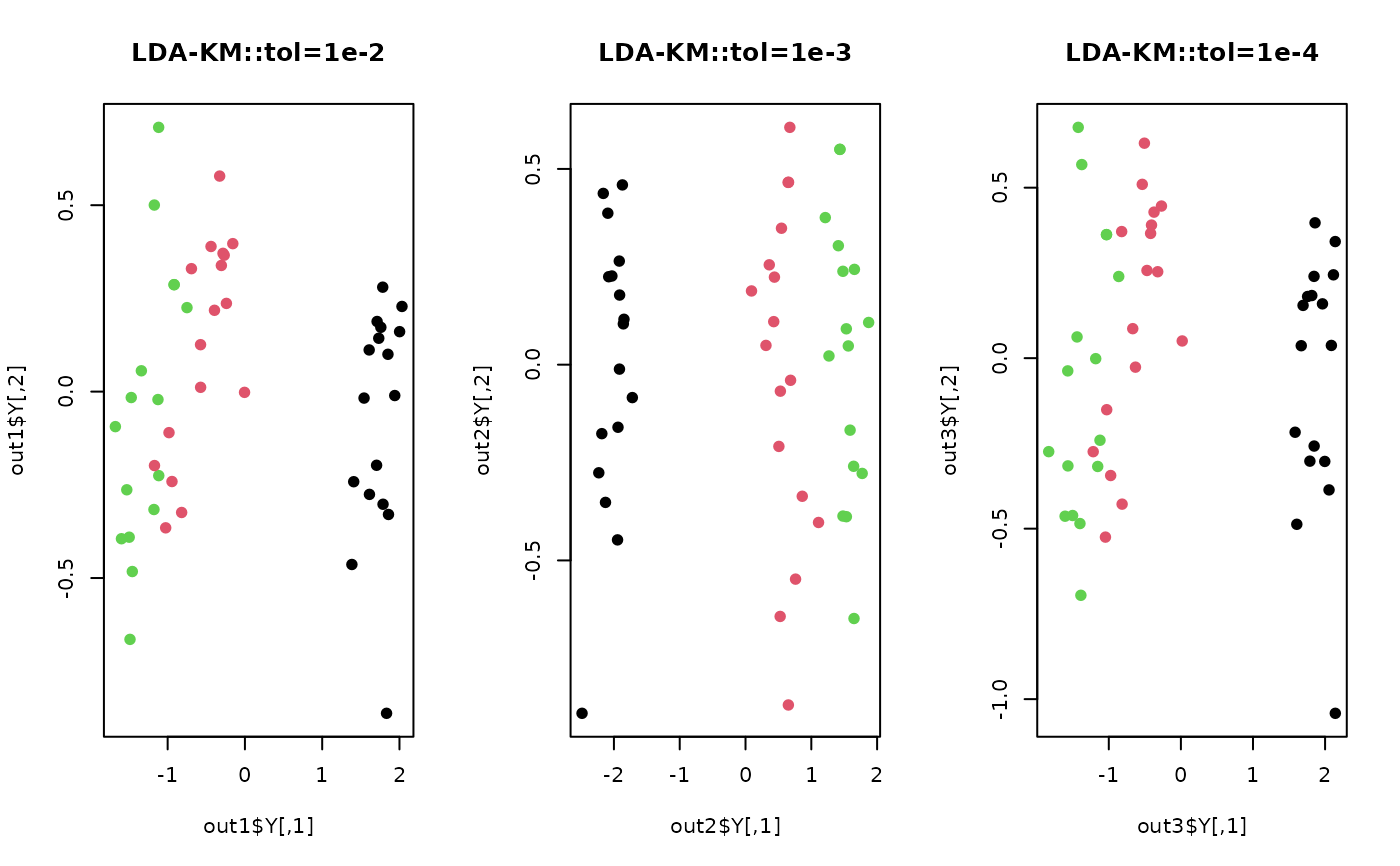

## use iris dataset

data(iris)

set.seed(100)

subid <- sample(1:150, 50)

X <- as.matrix(iris[subid,1:4])

lab <- as.factor(iris[subid,5])

## try different tolerance level

out1 = do.ldakm(X, abstol=1e-2)

out2 = do.ldakm(X, abstol=1e-3)

out3 = do.ldakm(X, abstol=1e-4)

## visualize

opar <- par(no.readonly=TRUE)

par(mfrow=c(1,3))

plot(out1$Y, pch=19, col=lab, main="LDA-KM::tol=1e-2")

plot(out2$Y, pch=19, col=lab, main="LDA-KM::tol=1e-3")

plot(out3$Y, pch=19, col=lab, main="LDA-KM::tol=1e-4")

par(opar)

par(opar)