In the standard, convex RSR problem (do.rsr), row-sparsity for self-representation is obtained with the matrix \(\ell_{2,1}\) norm, i.e., \(\|W\|_{2,1} = \sum_i \|W_{i:}\|_2\). Its non-convex extension aims at a higher level of sparsity by using the \(\ell_{2,l}\) norm \(\|W\|_{2,l}\) for a user-chosen \(l\in (0,1)\), and it is computed with the Iteratively Reweighted Least Squares (IRLS) algorithm.
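To make the row-wise norms above concrete, here is a minimal base-R sketch. It assumes the usual definition \(\|W\|_{2,l} = (\sum_i \|W_{i:}\|_2^l)^{1/l}\); the helper name `l2l_norm` is hypothetical and is not part of the package.

```r
# Row-wise mixed norm of a matrix: (sum_i ||W[i, ]||_2^l)^(1 / l).
# `l2l_norm` is an illustrative helper, not a package function.
l2l_norm <- function(W, l = 1) {
  row_norms <- sqrt(rowSums(W^2))   # Euclidean norm of each row
  sum(row_norms^l)^(1 / l)
}

# A small matrix with one all-zero row
W <- rbind(c(3, 4), c(0, 0), c(1, 0))

n1 <- l2l_norm(W, l = 1)     # l = 1 gives the l_{2,1} norm: 5 + 0 + 1 = 6
nh <- l2l_norm(W, l = 0.5)   # l in (0,1) gives the non-convex variant
```

An all-zero row adds nothing to either value, which is why penalizing these norms favors matrices whose rows vanish entirely.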

do.nrsr(

X,

ndim = 2,

expl = 0.5,

preprocess = c("null", "center", "scale", "cscale", "whiten", "decorrelate"),

lbd = 1

)

Arguments

- X

an \((n\times p)\) matrix or data frame whose rows are observations and columns represent independent variables.

- ndim

an integer-valued target dimension.

- expl

an exponent \(l\) in the \(\ell_{2,l}\) norm for sparsity. Must be in \((0,1)\); setting \(l=1\) reduces to the RSR problem.

- preprocess

an additional option for preprocessing the data. Default is "null". See also aux.preprocess for more details.

- lbd

nonnegative number to control the degree of self-representation by imposing row-sparsity.
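The following sketch illustrates how `expl` and `lbd` could enter an IRLS iteration. It is an assumption-laden simplification, not the package's implementation: it uses a squared Frobenius loss \(\|X - XW\|_F^2\) with a smoothed penalty \(\sum_i (\|W_{i:}\|_2^2 + \epsilon)^{l/2}\), and the function name `irls_rowsparse` and the arguments `iters` and `eps` are hypothetical.

```r
# Simplified IRLS sketch (assumed squared loss; NOT the code behind do.nrsr).
# Objective: ||X - XW||_F^2 + lbd * sum_i (||W[i, ]||_2^2 + eps)^(expl / 2)
irls_rowsparse <- function(X, expl = 0.5, lbd = 1, iters = 50, eps = 1e-8) {
  G <- crossprod(X)                  # p x p Gram matrix X'X
  p <- ncol(X)
  W <- diag(p)                       # start from the trivial self-representation
  for (it in seq_len(iters)) {
    row_sq <- rowSums(W^2) + eps     # smoothed squared row norms
    d <- (expl / 2) * row_sq^(expl / 2 - 1)      # reweighting from current rows
    W <- solve(G + lbd * diag(d, nrow = p), G)   # weighted ridge-type update
  }
  W
}

# Centered iris measurements as a toy input
X <- scale(as.matrix(iris[, 1:4]), center = TRUE, scale = FALSE)
W <- irls_rowsparse(X, expl = 0.5, lbd = 1)

# Rank features by row norm (assumed scoring rule, as in RSR-type methods)
scores <- sqrt(rowSums(W^2))
ranking <- order(scores, decreasing = TRUE)
```

Each pass solves a weighted least-squares problem whose weights grow as a row of `W` shrinks, so small rows are pushed further toward zero. A larger `lbd` strengthens that effect, and a smaller `expl` makes the penalty more strongly non-convex.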

Value

a named list containing

- Y

an \((n\times ndim)\) matrix whose rows are embedded observations.

- featidx

a length-\(ndim\) vector of indices with highest scores.

- trfinfo

a list containing information for out-of-sample prediction.

- projection

a \((p\times ndim)\) matrix whose columns are basis vectors for projection.
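A short sketch of inspecting the returned list, using only the element names documented above. It assumes the function is exported by the Rdimtools package, so the call is guarded in case the package is not installed.

```r
# Inspect the documented elements of the returned list.
# Guarded: runs only if Rdimtools (assumed to provide do.nrsr) is available.
if (requireNamespace("Rdimtools", quietly = TRUE)) {
  X   <- as.matrix(iris[, 1:4])
  out <- Rdimtools::do.nrsr(X, ndim = 2, expl = 0.5, lbd = 1)

  dim(out$Y)            # embedded observations, n x ndim
  out$featidx           # indices of the selected features
  dim(out$projection)   # projection basis, p x ndim
  names(out$trfinfo)    # information kept for out-of-sample prediction
}
```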

References

Zhu P, Zhu W, Wang W, Zuo W, Hu Q (2017). “Non-Convex Regularized Self-Representation for Unsupervised Feature Selection.” Image and Vision Computing, 60, 22–29.

See also

do.rsr

Examples

# \donttest{

## use iris data

data(iris)

set.seed(100)

subid = sample(1:150, 50)

X = as.matrix(iris[subid,1:4])

label = as.factor(iris[subid,5])

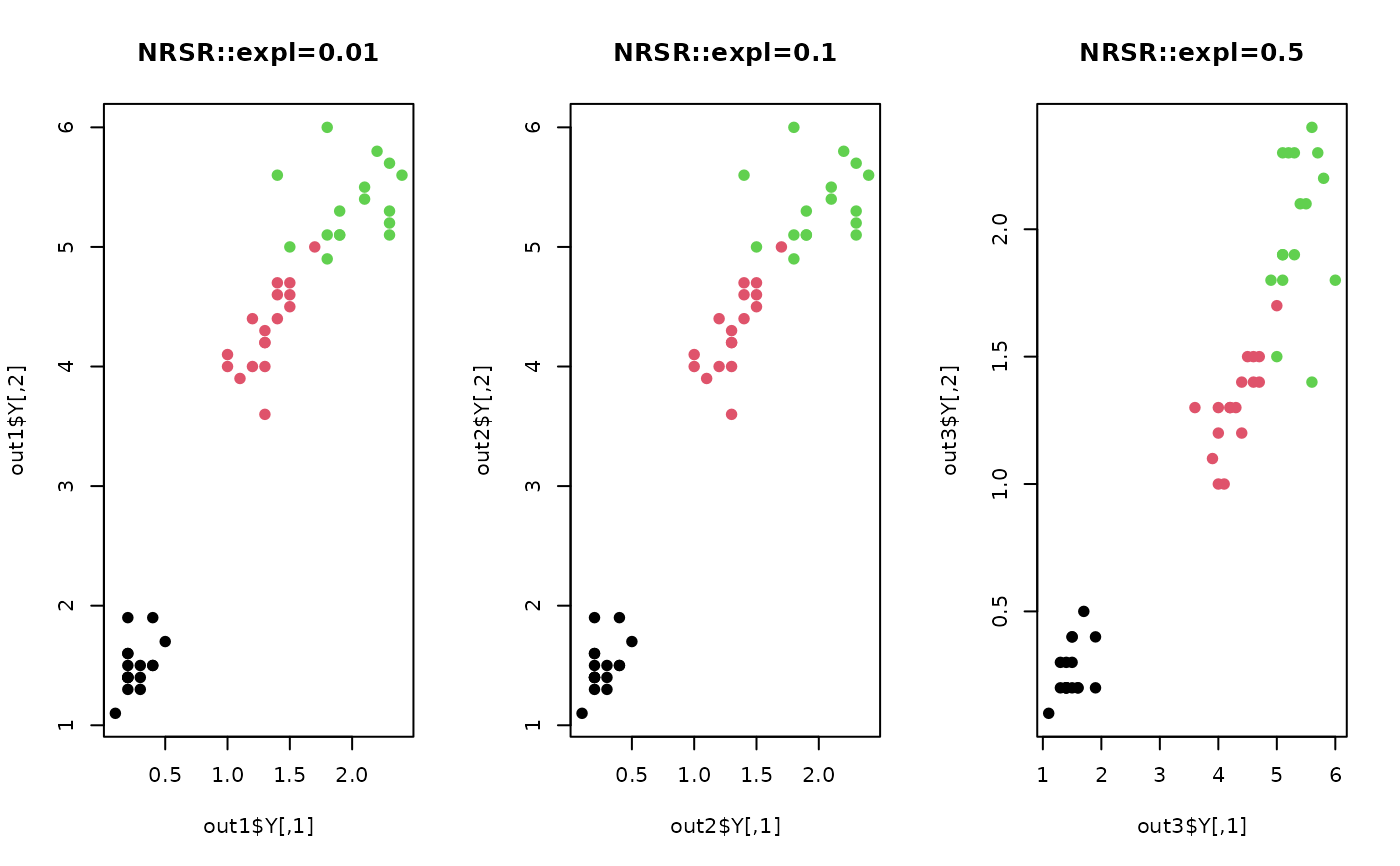

#### try different exponents for regularization

out1 = do.nrsr(X, expl=0.01)

out2 = do.nrsr(X, expl=0.1)

out3 = do.nrsr(X, expl=0.5)

#### visualize

opar <- par(no.readonly=TRUE)

par(mfrow=c(1,3))

plot(out1$Y, pch=19, col=label, main="NRSR::expl=0.01")

plot(out2$Y, pch=19, col=label, main="NRSR::expl=0.1")

plot(out3$Y, pch=19, col=label, main="NRSR::expl=0.5")

par(opar)

# }
