Sliced Inverse Regression (SIR) is a supervised linear dimension reduction technique. Unlike engineering-driven methods, SIR builds on the concept of a central subspace: a subspace such that, once the data are projected onto it, the response is conditionally independent of the original predictors. The method first divides the range of the response variable into slices, then extracts the projection vectors along which the projected data best explain the response.
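The slicing-and-projection idea described above can be sketched in base R. This is a minimal illustration of the textbook SIR steps (whiten the predictors, average them within slices of the sorted response, take the leading eigenvectors of the weighted covariance of slice means), not the code used by `do.sir`. The helper name `sir_sketch`, the equal-count slicing rule, and the returned list layout are assumptions made for this example.

```r
# Minimal SIR sketch in base R (hypothetical helper, not the package's code)
sir_sketch <- function(X, y, ndim = 2, h = 5) {
  X  <- as.matrix(X)
  n  <- nrow(X)
  Xc <- sweep(X, 2, colMeans(X))             # center the predictors

  # inverse square root of the sample covariance, used to whiten Xc
  eg       <- eigen(cov(Xc), symmetric = TRUE)
  Sinvhalf <- eg$vectors %*% diag(1 / sqrt(eg$values)) %*% t(eg$vectors)
  Z        <- Xc %*% Sinvhalf

  # assumption: h slices of roughly equal size along the sorted response
  slice <- integer(n)
  slice[order(y)] <- ceiling(seq_len(n) * h / n)

  # weighted covariance of the within-slice means of Z
  M <- matrix(0, ncol(X), ncol(X))
  for (s in seq_len(h)) {
    idx <- which(slice == s)
    m   <- colMeans(Z[idx, , drop = FALSE])
    M   <- M + (length(idx) / n) * tcrossprod(m)
  }

  # leading eigenvectors, mapped back to the original predictor scale
  eta  <- eigen(M, symmetric = TRUE)$vectors[, seq_len(ndim), drop = FALSE]
  proj <- Sinvhalf %*% eta
  list(Y = Xc %*% proj, projection = proj)
}
```

With `h = 2` the matrix of slice means has rank at most one (the two weighted slice means sum to zero after centering), so only one informative direction can be recovered. This is one reason to compare several slice counts.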

Arguments

- X

an \((n\times p)\) matrix or data frame whose rows are observations and columns represent independent variables.

- response

a length-\(n\) vector of response values.

- ndim

an integer-valued target dimension.

- h

the number of slices into which the range of the response vector is divided.

- preprocess

an additional option for preprocessing the data. Default is "center". See also aux.preprocess for more details.

Value

a named list containing

- Y

an \((n\times ndim)\) matrix whose rows are embedded observations.

- trfinfo

a list containing information for out-of-sample prediction.

- projection

a \((p\times ndim)\) matrix whose columns are basis vectors for projection.
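As an illustration of how a \((p\times ndim)\) projection matrix is typically applied to new observations, the sketch below subtracts the training column means and multiplies by the basis. It assumes that "center" preprocessing means column-mean subtraction. `embed_new` and its arguments are hypothetical names, and the actual contents of `trfinfo` are not relied on here.

```r
# Hypothetical helper: embed new rows with a fitted (p x ndim) basis,
# assuming the only preprocessing was subtraction of training column means.
embed_new <- function(Xnew, center, projection) {
  Xnew <- as.matrix(Xnew)
  sweep(Xnew, 2, center) %*% projection
}

# toy data standing in for a training set and a fitted basis
set.seed(1)
Xtrain <- matrix(rnorm(20 * 4), nrow = 20)
basis  <- qr.Q(qr(matrix(rnorm(4 * 2), nrow = 4)))   # an arbitrary (4 x 2) basis
Ynew   <- embed_new(Xtrain[1:3, ], colMeans(Xtrain), basis)
```

Each row of `Ynew` is the embedded version of the corresponding row passed in, with one column per target dimension.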

References

Li K (1991). “Sliced Inverse Regression for Dimension Reduction.” Journal of the American Statistical Association, 86(414), 316.

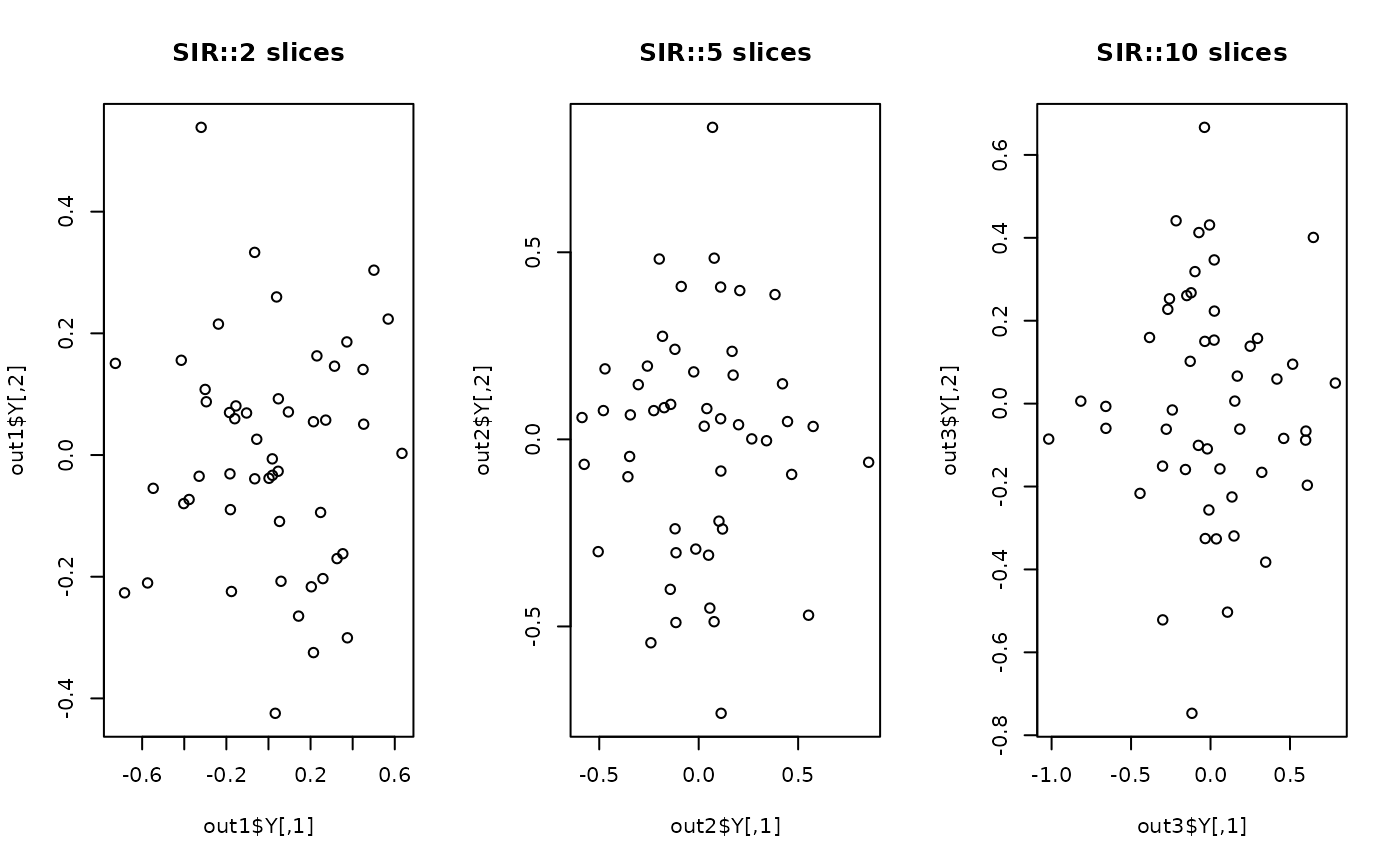

Examples

## generate swiss roll with auxiliary dimensions

## this follows the reference example from the LSIR paper.

set.seed(100)

n = 50

theta = runif(n)

h = runif(n)

t = (1+2*theta)*(3*pi/2)

X = array(0,c(n,10))

X[,1] = t*cos(t)

X[,2] = 21*h

X[,3] = t*sin(t)

X[,4:10] = matrix(runif(7*n), nrow=n)

## corresponding response vector

y = sin(5*pi*theta)+(runif(n)*sqrt(0.1))

## try with different numbers of slices

out1 = do.sir(X, y, h=2)

out2 = do.sir(X, y, h=5)

out3 = do.sir(X, y, h=10)

## visualize

opar <- par(no.readonly=TRUE)

par(mfrow=c(1,3))

plot(out1$Y, main="SIR::2 slices")

plot(out2$Y, main="SIR::5 slices")

plot(out3$Y, main="SIR::10 slices")

par(opar)
