Also known as multilinear regression or semipenalized CCA, Orthogonal Partial Least Squares (OPLS) was first used to perform multilinear ordinary least squares. Unlike PLS or CCA, OPLS does not rely on the projected variance of the response, data2. Instead, it exploits the projected variance of the input, that is, the covariance of data1, and relates it to data2 in a cross-covariance setting. As a result, OPLS returns projection information for data1 only, just like the unsupervised methods in our package.
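To make the description above concrete, here is a minimal base-R sketch of one common way OPLS is formulated: find directions `w` that solve the generalized eigenproblem `Cxy Cyx w = lambda Cxx w`, where `Cxx` is the covariance of data1 and `Cxy` is the cross-covariance of data1 and data2. This formulation is an assumption on our part and is not taken from the package source. The helper name `opls_sketch` is hypothetical, and its output is not claimed to match `do.opls` in preprocessing, scaling, or sign.

```r
# Hypothetical helper, NOT part of the package: a sketch of OPLS as the
# generalized eigenproblem  Cxy Cyx w = lambda Cxx w  (assumed formulation).
opls_sketch <- function(data1, data2, ndim = 2) {
  X   <- scale(data1, center = TRUE, scale = FALSE)  # centered inputs, used for the projection
  Cxx <- stats::cov(data1)           # (N x N) covariance of data1
  Cxy <- stats::cov(data1, data2)    # (N x M) cross-covariance of data1 and data2
  A   <- Cxy %*% t(Cxy)              # (N x N), symmetric positive semidefinite

  # Reduce to an ordinary symmetric eigenproblem via Cholesky: Cxx = R'R.
  # Assumes Cxx is positive definite (more observations than variables).
  R    <- chol(Cxx)
  Rinv <- backsolve(R, diag(ncol(R)))
  M    <- t(Rinv) %*% A %*% Rinv
  eig  <- eigen((M + t(M)) / 2, symmetric = TRUE)    # symmetrize against round-off

  W <- Rinv %*% eig$vectors[, seq_len(ndim), drop = FALSE]  # satisfies W' Cxx W = I
  list(Y = X %*% W, projection = W, eigvals = eig$values[seq_len(ndim)])
}

set.seed(1)
mat1 <- matrix(rnorm(100 * 12), nrow = 100) + 10
mat2 <- matrix(rnorm(100 * 6),  nrow = 100) - 10
out  <- opls_sketch(mat1, mat2, ndim = 2)
dim(out$Y)           # 100 2
dim(out$projection)  # 12 2
```

Note that only `Cxx` and `Cxy` enter the computation. The covariance of data2 is never used, which is why the sketch yields loadings for data1 alone.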

do.opls(data1, data2, ndim = 2)

Arguments

- data1

an \((n\times N)\) data matrix whose rows are observations.

- data2

an \((n\times M)\) data matrix whose rows are observations.

- ndim

an integer-valued target dimension.

Value

a named list containing

- Y

an \((n\times ndim)\) matrix of projected observations from data1.

- projection

an \((N\times ndim)\) matrix whose columns are loadings for data1.

- trfinfo

a list containing information for out-of-sample prediction for data1.

- eigvals

a vector of eigenvalues for iterative decomposition.

References

Barker M, Rayens W (2003). “Partial Least Squares for Discrimination.” Journal of Chemometrics, 17(3), 166–173.

See also

Examples

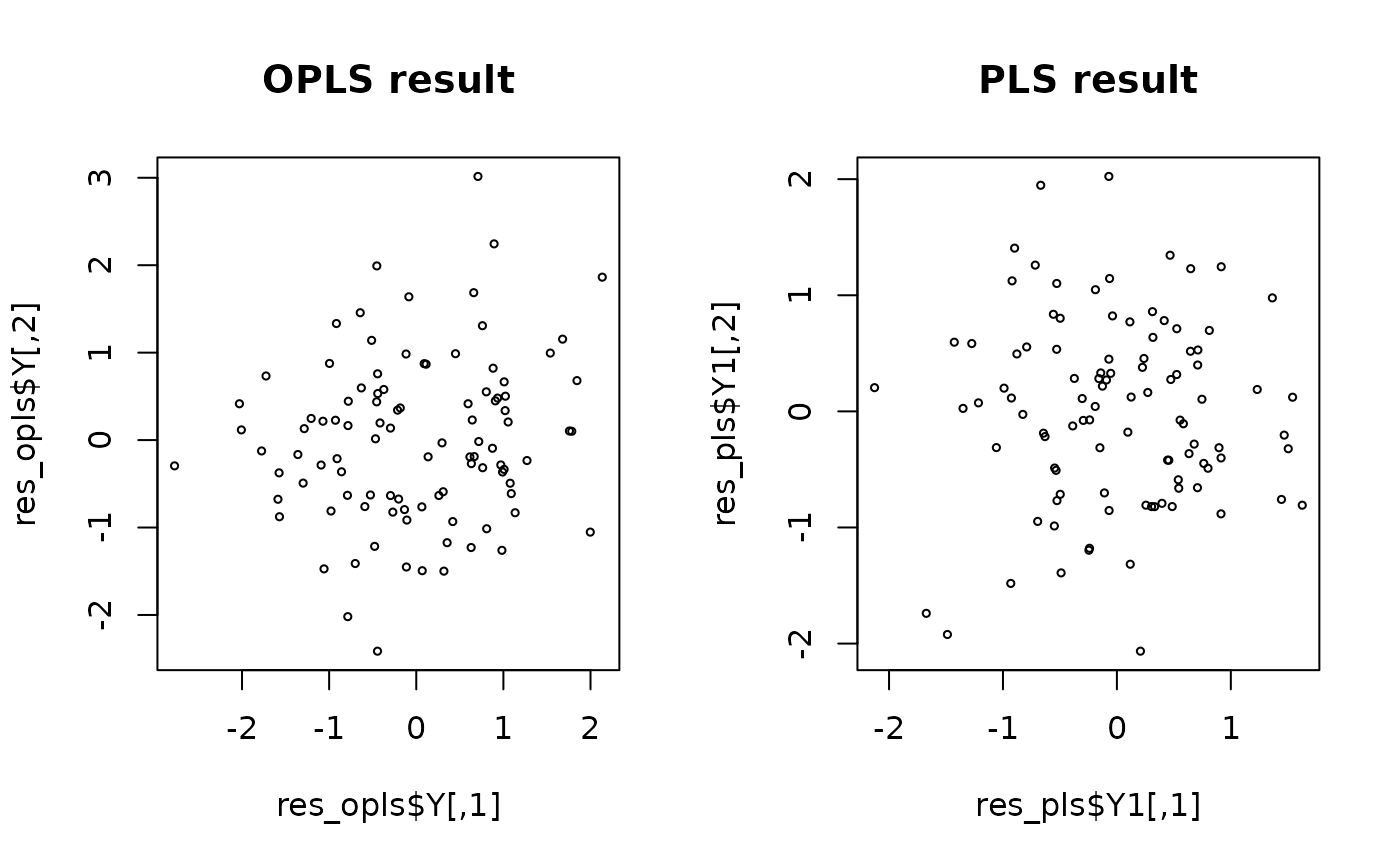

## generate 2 normal data matrices

mat1 = matrix(rnorm(100*12),nrow=100)+10 # 12-dim normal

mat2 = matrix(rnorm(100*6), nrow=100)-10 # 6-dim normal

## compare OPLS and PLS

res_opls = do.opls(mat1, mat2, ndim=2)

res_pls = do.pls(mat1, mat2, ndim=2)

## visualize

opar <- par(no.readonly=TRUE)

par(mfrow=c(1,2))

plot(res_opls$Y, cex=0.5, main="OPLS result")

plot(res_pls$Y1, cex=0.5, main="PLS result")

par(opar)
