Though it may sound weird, this method aims at finding discriminative features under the unsupervised learning framework. It assumes that the class label could be predicted by a linear classifier and iteratively updates its discriminative nature while attaining row-sparsity scores for selecting features.

do.udfs(

X,

ndim = 2,

lbd = 1,

gamma = 1,

k = 5,

preprocess = c("null", "center", "scale", "cscale", "whiten", "decorrelate")

)Arguments

- X

an \((n\times p)\) matrix or data frame whose rows are observations and columns represent independent variables.

- ndim

an integer-valued target dimension.

- lbd

regularization parameter for local Gram matrix to be invertible.

- gamma

regularization parameter for row-sparsity via \(\ell_{2,1}\) norm.

- k

size of nearest neighborhood for each data point.

- preprocess

an additional option for preprocessing the data. Default is "null". See also

aux.preprocessfor more details.

Value

a named list containing

- Y

an \((n\times ndim)\) matrix whose rows are embedded observations.

- featidx

a length-\(ndim\) vector of indices with highest scores.

- trfinfo

a list containing information for out-of-sample prediction.

- projection

a \((p\times ndim)\) whose columns are basis for projection.

References

Yang Y, Shen HT, Ma Z, Huang Z, Zhou X (2011). “L2,1-Norm Regularized Discriminative Feature Selection for Unsupervised Learning.” In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence - Volume Volume Two, IJCAI'11, 1589--1594.

Examples

## use iris data

data(iris)

set.seed(100)

subid = sample(1:150, 50)

X = as.matrix(iris[subid,1:4])

label = as.factor(iris[subid,5])

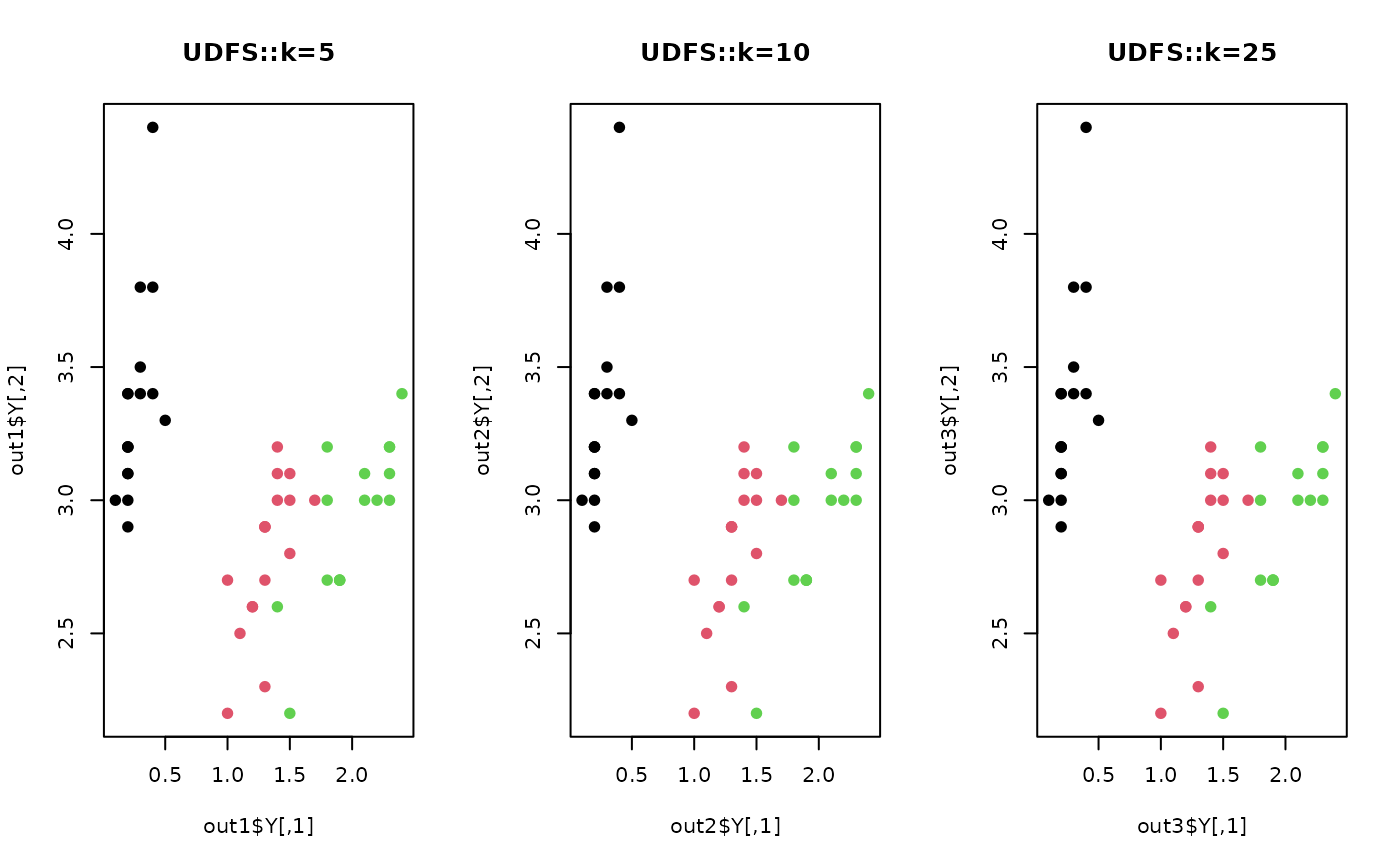

#### try different neighborhood size

out1 = do.udfs(X, k=5)

out2 = do.udfs(X, k=10)

out3 = do.udfs(X, k=25)

#### visualize

opar = par(no.readonly=TRUE)

par(mfrow=c(1,3))

plot(out1$Y, pch=19, col=label, main="UDFS::k=5")

plot(out2$Y, pch=19, col=label, main="UDFS::k=10")

plot(out3$Y, pch=19, col=label, main="UDFS::k=25")

par(opar)

par(opar)